Exposure Management Has a Plumbing Problem

by Dan Pagel, CEO & Board Director

Here's a thought experiment: imagine your house has a leak. You call a plumber. The plumber shows up, runs a diagnostic, and tells you there are five leaks. Except there aren't five leaks. There's one leak, reported by five different sensors, each using its own naming convention. And nobody can tell you which wall it's behind because the building plans are from 2019 and the contractor who last touched it left the company.

Now imagine that's happening across 50,000 assets, 15 security tools, and six teams who all think someone else owns the problem. That's enterprise exposure management in 2026.

We've spent the past year talking to CISOs, security operations leaders, and the teams doing the actual work. The pain they describe isn't about detection anymore. Enterprises have invested heavily in visibility: scanners, cloud security, AppSec tools, threat intelligence feeds. The tools are there. The data is flowing. What's broken is everything that happens after the data lands.

And the data tells the same story. The “2025 Ponemon Cybersecurity Threat and Risk Management Report” found that 60% of breach victims were compromised through a known vulnerability where the patch existed but was never applied. Not because no one saw it, but because broken processes kept it from getting fixed. Palo Alto Networks' Unit 42 report found that 13% of social engineering incidents traced back to alerts that were simply ignored. IDC research shows large enterprises ignore roughly 30% of their alerts entirely because they can't keep up with the volume. The average enterprise SOC processes over 11,000 alerts per day.

The problem isn't finding the needle in the haystack. It's that nobody can agree on how many haystacks there are, who owns each one, or whether the needle was already found last Tuesday by a different scanner.

That reality shaped everything we built in this release.

The Two Problems Everyone Has and No One Has Solved

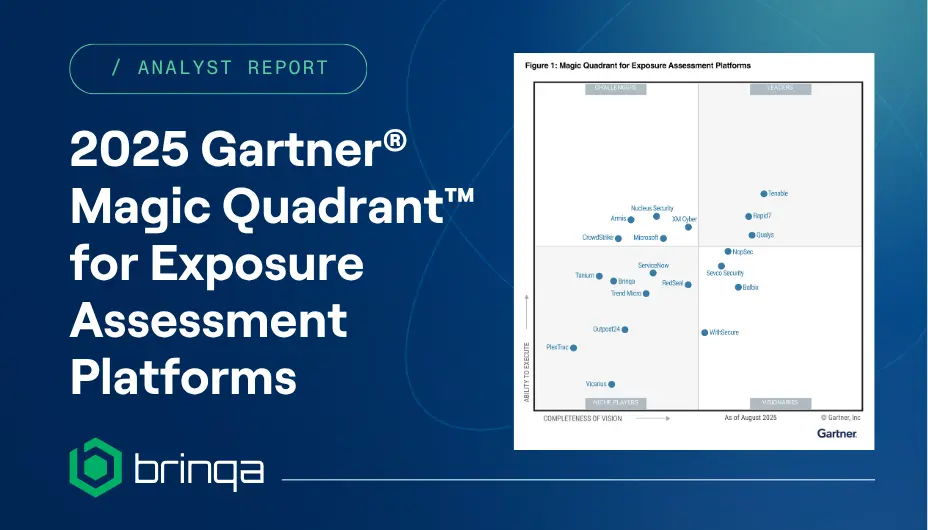

Deduplication and ownership attribution are the unglamorous underbelly of exposure management. They don't show up in analyst quadrant criteria. They don't make the keynote. But ask any practitioner what eats their week, and you'll hear some version of the same story.

Every enterprise running multiple scanners (which is every enterprise) deals with duplicate findings. A single vulnerability gets reported differently by each tool. Different taxonomies. Different severity ratings. Different identifiers. Security teams spend hours reconciling what is fundamentally the same issue, reported five different ways across three different tools. That's not security work. That's spreadsheet archaeology. And it's happening at massive scale.
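Here is a minimal Python sketch of the manual baseline. It collapses findings that share an asset and a CVE identifier into one record. The scanner names, fields, and severity labels are hypothetical. The sketch also shows the baseline's limit: a finding reported only under a vendor plugin ID drops out of the match entirely. That is why simple CVE matching isn't enough. This illustrates the problem. It is not how Brinqa's agent works.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass(frozen=True)
class Finding:
    # Hypothetical raw finding, as one scanner might report it.
    scanner: str
    asset: str
    cve: str | None   # some tools report only a vendor plugin ID
    severity: str     # each tool uses its own severity scale


@dataclass
class ConsolidatedFinding:
    asset: str
    cve: str
    sources: set[str] = field(default_factory=set)
    severities: dict[str, str] = field(default_factory=dict)


def dedupe_by_cve(findings: list[Finding]) -> tuple[list[ConsolidatedFinding], list[Finding]]:
    """Group findings on (normalized asset name, normalized CVE ID).

    Findings without a CVE cannot be matched by this static rule and are
    returned separately, which is where manual reconciliation begins.
    """
    merged: dict[tuple[str, str], ConsolidatedFinding] = {}
    unmatched: list[Finding] = []
    for f in findings:
        if not f.cve:
            unmatched.append(f)
            continue
        key = (f.asset.strip().lower(), f.cve.strip().upper())
        record = merged.setdefault(key, ConsolidatedFinding(asset=key[0], cve=key[1]))
        record.sources.add(f.scanner)
        record.severities[f.scanner] = f.severity  # keep each tool's rating
    return list(merged.values()), unmatched


# Usage: three reports of what is likely one issue on one host.
findings = [
    Finding("scanner_a", "web-01.corp", "CVE-2021-44228", "Critical"),
    Finding("scanner_b", "WEB-01.corp", "cve-2021-44228", "9.8"),
    Finding("scanner_c", "web-01.corp", None, "High"),  # plugin ID only
]
records, leftovers = dedupe_by_cve(findings)
```

Two of the three reports merge. The third needs a human, or smarter correlation, to connect it.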

Ownership is the same story, but arguably worse. Ponemon found that only 42% of organizations include an asset inventory program as part of their vulnerability management process, and just 39% both assign designated owners to their inventoried assets and rank those assets by criticality. As a result, the majority of enterprises are attempting to run exposure management programs where ownership is unclear or fragmented from the start.

To make matters more complex, asset ownership and remediation responsibility are often not the same. A service or custom application may run on a server, that server may run in the cloud, and the resulting exposure may impact the business in ways that span multiple teams. Who owns the asset? Who owns the risk? Who is accountable for remediation? Without clear attribution, these questions create friction, delays, and missed outcomes, further compounding the inefficiency and cost of managing exposure at enterprise scale.

As Larry Ponemon put it in the Institute's research on vulnerability management: enterprises "have gaps around assessing the risk and getting full visibility across their IT assets," and that gap leads directly to low confidence in avoiding breaches. When ownership is unclear, remediation stalls. Accountability evaporates. SLAs become decorative.

These aren't edge cases or configuration problems you solve with a longer implementation. They're structural. And they hit every exposure management environment, regardless of maturity or tooling.

That Changes Today

Brinqa’s release yesterday introduces two AI-native agents that go directly at these problems: the AI Attribution Agent and the AI Deduplication Agent.

The AI Attribution Agent closes the ownership gap. When asset attributes like ownership, business unit, or environment classification are missing or stale (and, let's be honest, they almost always are), the agent infers them using machine learning models trained on patterns across your existing data. It doesn't guess blindly. It surfaces its reasoning, assigns a confidence score, tracks attribution sources, and keeps a human in the loop where it matters. You see exactly what the AI inferred and why, and you decide whether to accept it. It learns from your feedback and gets sharper over time.
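The agent's models aren't described in detail here, so the following Python sketch is only a stand-in. It shows confidence-scored inference with a human-review threshold. In place of the machine learning the post describes, it uses a simple majority vote among peer assets. Peers are hosts that share a hostname prefix, which is a hypothetical grouping rule. The sketch still has the same shape as the agent's output: an inferred value, a confidence score, a recorded reason, and a flag that sends low-confidence guesses to a person.

```python
from __future__ import annotations

from collections import Counter
from dataclasses import dataclass


@dataclass
class Asset:
    hostname: str
    owner: str | None = None


@dataclass
class OwnerSuggestion:
    hostname: str
    owner: str
    confidence: float   # share of peer assets that agree on this owner
    reason: str         # human-readable explanation of the inference
    needs_review: bool  # True when confidence is below the review threshold


def peer_group(hostname: str) -> str:
    # Hypothetical grouping rule: hosts sharing the prefix before the first "-" are peers.
    return hostname.split("-", 1)[0].lower()


def suggest_owners(assets: list[Asset], review_below: float = 0.8) -> list[OwnerSuggestion]:
    # Count known owners within each peer group.
    owners_by_group: dict[str, Counter] = {}
    for a in assets:
        if a.owner:
            owners_by_group.setdefault(peer_group(a.hostname), Counter())[a.owner] += 1

    suggestions: list[OwnerSuggestion] = []
    for a in assets:
        if a.owner:
            continue
        group = peer_group(a.hostname)
        votes = owners_by_group.get(group)
        if not votes:
            continue  # no evidence: leave unattributed rather than guess
        owner, count = votes.most_common(1)[0]
        total = sum(votes.values())
        confidence = count / total
        suggestions.append(OwnerSuggestion(
            hostname=a.hostname,
            owner=owner,
            confidence=confidence,
            reason=f"{count} of {total} peers in group '{group}' are owned by {owner}",
            needs_review=confidence < review_below,
        ))
    return suggestions


# Usage: one unowned payments host, one unowned host with no peers.
assets = [
    Asset("pay-api-01", "payments"),
    Asset("pay-api-02", "payments"),
    Asset("pay-db-01", "payments"),
    Asset("pay-batch-01", "data-eng"),
    Asset("pay-web-09"),
    Asset("hr-portal-01"),
]
suggestions = suggest_owners(assets)
```

Here `pay-web-09` gets a suggested owner of `payments` with confidence 0.75. That falls below the 0.8 threshold, so the suggestion is flagged for review. `hr-portal-01` has no peer evidence and is left alone.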

The AI Deduplication Agent consolidates duplicate findings across sources into a single, enriched record. Not through static rules or simple CVE matching, but through intelligent correlation that understands how different scanners describe the same vulnerability, even when the taxonomies don't align. The result is a dramatically cleaner picture of actual exposure. Fewer phantom findings inflating your risk numbers. Fewer conflicting tickets confusing remediation teams. Better metrics, because the numbers finally represent reality instead of scanner overlap.

Both capabilities apply to every exposure management environment. Day one value. No six-month configuration project. No expert-dependent setup required.

SmartFlows: The End of "We Need a Brinqa Expert for That"

Alongside these agents, this release introduces SmartFlows, a no-code, drag-and-drop automation engine for building policy-driven workflows.

If you've ever watched a security team try to modify an automation workflow and heard the words "we'll need to open a ticket with professional services for that," you understand the problem SmartFlows solves. Historically, automating exposure management workflows in complex environments has required deep platform expertise, custom code, and meaningful implementation time. SmartFlows changes that equation entirely.

Teams can now build and modify workflows visually, including filtering, routing, escalating, and acting on exposure conditions, without writing code. SmartFlows ships with dynamic rules libraries that incorporate Brinqa's expanding threat intelligence: exploitability signals, known exploited vulnerabilities, MITRE ATT&CK mappings, CPE context. Intelligence becomes default logic, not optional enrichment. Customers stand up sophisticated, intelligence-driven automation faster, with more guardrails, and without the risk of someone's custom script quietly breaking in production at 2 AM on a Friday.
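SmartFlows is configured visually, so there is no product code to show here. As an illustration of the kind of logic such a workflow encodes, here is an ordered rule list in Python. The first rule that matches decides whether a finding is escalated, routed, or sent to triage. The field names and action labels are hypothetical and are not SmartFlows' actual schema. `known_exploited` stands in for a signal such as a known exploited vulnerabilities listing.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable


@dataclass
class Exposure:
    finding_id: str
    severity: str           # "critical" | "high" | "medium" | "low"
    known_exploited: bool   # hypothetical flag from a threat intelligence feed
    internet_facing: bool
    owner_team: str | None


@dataclass
class Rule:
    name: str
    condition: Callable[[Exposure], bool]
    action: str  # hypothetical action labels: "escalate", "route", "triage_queue"


# Order encodes priority: the first matching rule wins.
RULES = [
    Rule("KEV on internet-facing asset",
         lambda e: e.known_exploited and e.internet_facing, "escalate"),
    Rule("No owner yet",
         lambda e: e.owner_team is None, "triage_queue"),
    Rule("Critical or high severity",
         lambda e: e.severity in {"critical", "high"}, "route"),
]


def evaluate(exposure: Exposure, rules: list[Rule] = RULES) -> tuple[str, str]:
    """Return (rule name, action) for the first rule whose condition holds."""
    for rule in rules:
        if rule.condition(exposure):
            return rule.name, rule.action
    return "default", "monitor"
```

The ordered list is a deliberate choice. An exploited, exposed medium-severity finding still escalates ahead of an unexploited critical one, which matches the post's point that intelligence should be default logic.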

Built for AI From the Ground Up

There's an important distinction between bolting AI features onto an existing platform and building an architecture that's fundamentally designed for an AI-native future. We chose the latter. And the difference matters more than most people realize.

The Brinqa platform has been modernized so that trusted data, AI-driven intelligence, and action work together as a unified system, not as separate layers stitched together after the fact. Every AI agent operates on top of BrinqaDL, our enterprise-grade exposure management data lake, which serves as the system of record and single source of truth for exposure data.

BrinqaDL is built on a conviction that runs counter to how most platforms operate: more data is better. And not just more data ingested, but more of that data actually used. Many platforms pull data from dozens of sources but then discard large portions of what comes in. They strip context, flatten relationships, limit retention, and deliver a watered-down version of the truth back to customers. We take the opposite approach. Every attribute, every relationship, every signal gets preserved, because that richness is exactly what powers intelligent deduplication, inference, and prioritization. You can't make great decisions on half the data.

BrinqaDL is also built for the long game. It stores and analyzes years of vulnerability, asset, and remediation data, giving security leaders the historical depth they need for trend analysis, longitudinal reporting, regulatory compliance, and strategic risk measurement. When a CISO needs to show the board how remediation velocity has improved over three years, or how exposure across a business unit has trended quarter over quarter, BrinqaDL makes that possible. No stitching together spreadsheets. No pulling from disconnected systems. One data layer, governed and queryable, with the full historical picture.
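BrinqaDL's internals aren't described here. Still, the board question this paragraph mentions, whether remediation velocity has improved year over year, becomes a simple aggregation once history is retained. Here is a minimal sketch, assuming each finding record carries a hypothetical detection date and fix date:

```python
from __future__ import annotations

from collections import defaultdict
from datetime import date
from statistics import median


def median_days_to_remediate(records: list[tuple[date, date | None]]) -> dict[int, float]:
    """Median days from detection to fix, grouped by the year the fix landed.

    Each record is (detected, fixed); fixed is None for findings still open,
    which this simple version excludes.
    """
    by_year: dict[int, list[int]] = defaultdict(list)
    for detected, fixed in records:
        if fixed is None:
            continue
        by_year[fixed.year].append((fixed - detected).days)
    return {year: float(median(days)) for year, days in sorted(by_year.items())}


history = [
    (date(2023, 1, 1), date(2023, 3, 2)),    # 60 days
    (date(2023, 5, 1), date(2023, 5, 31)),   # 30 days
    (date(2024, 1, 1), date(2024, 1, 15)),   # 14 days
    (date(2024, 2, 1), None),                # still open
]
trend = median_days_to_remediate(history)
```

Excluding still-open findings keeps the example short, but it biases the metric toward fast fixes. A real report would also track the age of open findings.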

That data foundation is also what makes trust in AI possible at the enterprise level. This is where we've listened hardest to our customers and to security leaders across the industry. The message has been remarkably consistent: they want AI that helps them move faster. They do not want a black box.

So we built transparency into the framework itself rather than offering it as a feature toggle. Confidence scoring, explainability, and human-in-the-loop controls are architectural principles, not afterthoughts. When the platform infers an asset owner, you see the reasoning. When it consolidates findings, you can trace the logic. When it makes a recommendation, you can challenge it, override it, or approve it. That's not a limitation on AI. That's how you earn trust in AI, especially when the results are going in front of the board.

From Clean Data to Confident Decisions

Here's what all of this unlocks in practice:

When deduplication works, your risk metrics reflect reality instead of scanner noise. Executive reporting becomes defensible because the numbers represent actual exposure, not inflated finding counts. When you tell the board there are 5,000 critical findings, it means there are 5,000 critical findings, not roughly 1,200 real ones echoed across four scanners.

When ownership is inferred reliably, remediation workflows actually execute. Tickets route to the right teams. SLAs can be measured and enforced. Accountability becomes operational instead of a slide in the quarterly review.

When automation is accessible through SmartFlows, teams that used to depend on custom code or expert consultants can build and adapt workflows themselves: faster, with guardrails, and at scale.

And when all of this sits on a data layer that retains years of history, intelligence improves over time. You're not just reacting to today's scan. You're identifying patterns, measuring progress, and making decisions with the full picture. That's a fundamentally different operating model than what most enterprises are running today.

The entire downstream process, from prioritization through remediation to audit, depends on these things being right. That's where confidence comes from. Not from better dashboards. From knowing the data underneath is clean, deduplicated, attributed, trusted, and deep enough to tell the real story.

What Comes Next

This release includes BrinqaDL, our enterprise-grade exposure management data lake with years of historical retention and an AI-ready architecture; SmartFlows, our no-code drag-and-drop automation engine with intelligence-driven defaults; the AI Deduplication Agent; and the AI Attribute Inference Agent.

Coming in Q2: the AI Exploit Agent for adaptive risk prioritization with calculated reachability, and the AI Remediate Agent for adaptive remediation intelligence with campaign tracking. The foundation we're shipping today is what makes those capabilities possible, and powerful, when they arrive.

We've outlined the full details of what this release enables in yesterday's announcement.

Want to see Brinqa in action? Schedule time with a Brinqa Expert for a walkthrough of what's live and what's coming.